Introduction

Emotional expressions are important for robots to improve their social interaction with humans. The most commonly used modality is facial expression, which stimulates the human sense of sight. Modalities that stimulate the other human senses are far less common. To broaden the range of available modalities, this work studies one that diverges from those conventionally used: the body temperature of the robot.

Objective

This work aims to investigate how people perceive the emotional expression and the internal emotional state of a robot that simultaneously uses its face and its body temperature as emotional modalities.

Methodology

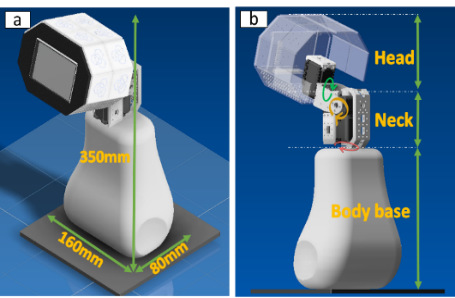

To address this research question, the robot TherMoody was used as a platform (see Fig. 1). TherMoody stands out from other robots for its ability to change its body temperature, specifically that of its head, from cold to hot. Its thermal system, composed of thermoelectric modules, is located in the head and can reach temperatures from 10 to 55°C. Further details of the design process and its validation can be found in [1] and [2].

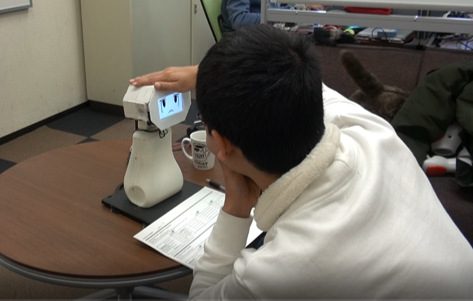

Twenty-five combinations (5 × 5: one facial expression and one thermo-emotional expression for each of anger, joy, fear, sadness and the neutral state) were evaluated by fifteen participants (see Fig. 3). The thermo-emotional expressions were designed based on temperature-related metaphors of emotion.
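The 5 × 5 design can be made concrete with a short enumeration. The sketch below simply lists every pairing of a facial expression with a thermo-emotional expression. The emotion labels come from this page; the function name `build_conditions` and the "congruent" flag (face and temperature expressing the same emotion) are hypothetical illustrations, not part of the original study materials.

```python
from itertools import product

# The five emotional states listed on this page.
EMOTIONS = ["anger", "joy", "fear", "sadness", "neutral"]


def build_conditions(emotions):
    """Return every (facial, thermal) pairing as a list of dicts.

    Hypothetical helper: each condition pairs one facial expression
    with one thermo-emotional expression, both drawn from the same
    set of labels, giving len(emotions) ** 2 conditions in total.
    """
    conditions = []
    for face, thermal in product(emotions, repeat=2):
        conditions.append({
            "face": face,
            "thermal": thermal,
            # Hypothetical flag: True when face and temperature
            # express the same emotion.
            "congruent": face == thermal,
        })
    return conditions


conditions = build_conditions(EMOTIONS)
n_congruent = sum(c["congruent"] for c in conditions)
print(len(conditions), n_congruent, len(conditions) - n_congruent)  # → 25 5 20
```

Of the 25 pairings, 5 use the same emotion in both modalities and 20 mix two different ones; the mixed pairings are those in which facial and thermal cues can conflict.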

Results

- Robot’s emotional expression: People based their judgment exclusively on the robot’s facial expression, regardless of changes in its body temperature.

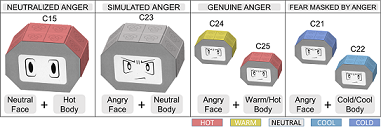

- Robot’s internal emotional state: In this judgment, there are combinations in which the thermo-emotional expression predominates over the facial expression. As a result, certain combinations produce the perception that the robot’s emotion is genuine, simulated, masked or neutralized.

To visualize the results of Table 3, Figure 9 illustrates how the perceived expression of anger is regulated by combining facial and thermo-emotional expressions.

Practical applications

Based on the results, TherMoody can regulate how its emotions are perceived by combining facial and thermal expressions. Through this capability, a robot can react to the same event in different ways. Moreover, this emotional behavior could be made consistent with the robot’s personality.

Publications

- [1] Denis Peña, Fumihide Tanaka: Validation of the Design of a Robot to Study the Thermo-Emotional Expression, Proceedings of the 10th International Conference on Social Robotics (ICSR 2018), pp. 75-85, Qingdao, China, November 2018.

- [2] Denis Peña, Fumihide Tanaka: Touch to Feel Me: Designing a Robot for Thermo-Emotional Communication, Proceedings of the Companion of the 2018 ACM/IEEE International Conference on Human-Robot Interaction (HRI 2018), pp. 207-208, Chicago, USA, March 2018.

(Updated on June 24, 2019)